Qianxu Wang

Robotics, 3D Vision

I am a fourth-year undergraduate student at Peking University, advised by Prof. Yixin Zhu. I am also fortunate to collaborate with Prof. Yaodong Yang at PKU and, remotely, with Prof. Leonidas J. Guibas at Stanford. In the summer of 2024, I was a visiting research student at Stanford, advised by Prof. Jeannette Bohg.

My long-term research goal is to achieve robust, human-level sensorimotor coordination in robots. I am also very interested in 3D vision and animation. My previous research has primarily focused on dexterous manipulation from a semantic perspective.

Currently, I am thinking about and exploring two key questions in manipulation:

What are the sources of knowledge for manipulation?

-

Shared information across datasets. The features of Cross-embodiment, cross-environment, and cross-quality make robotic datasets unique compared to data in other fields like vision and natural language. Defining a universal data format and unifying existing datasets, rather than solely collecting new ones, presents a promising approach to fundamentally addressing data scarcity in robotics. I am eager to explore the structure of shared motion primitives and semantics in these datasets and investigate how to integrate them to achieve semantic-aware and robust manipulation in the real world.

-

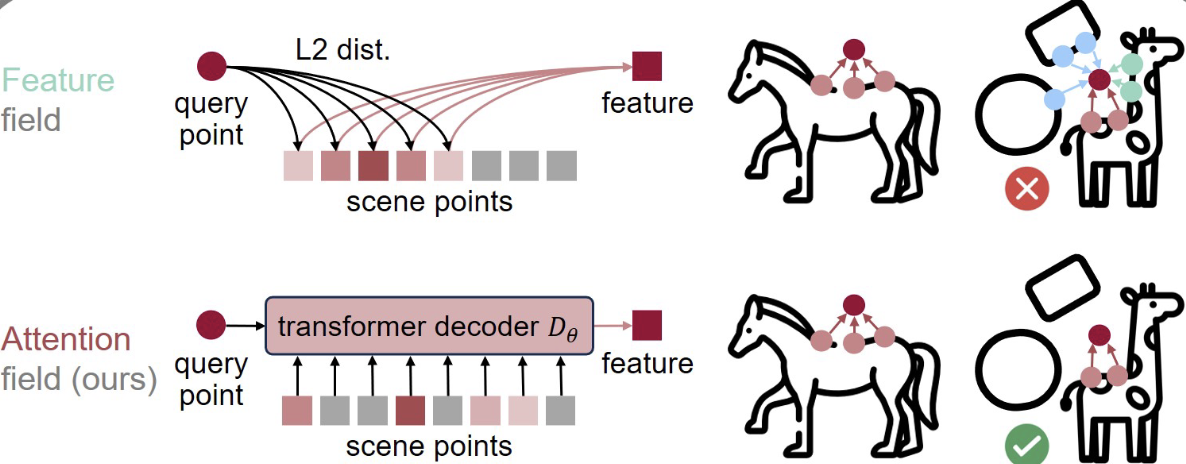

Shared foundations with scalable data sources. The vision domain offers rich semantic correspondences valuable for robotic perception, while natural language, as a natural carrier of reasoning and prompting, can enhance decision-making. I am excited to investigate the connections between robotics and scalable data sources by leveraging these shared foundations.

How can diverse sources of knowledge be effectively integrated?

- Structured policy design. Current policies (e.g., in imitation learning and reinforcement learning) directly map perception to the actions of a specific end-effector. This design (i) processes complex information without prioritization and (ii) restricts which data sources can be used. In contrast, humans first reason about interactions visually, then adapt during manipulation using closed-loop feedback from multiple modalities (e.g., tactile, acoustic). I am excited to explore the design of a structured manipulation policy, including how to integrate end-effector-agnostic action representations from diverse data sources and when to incorporate multimodal perception and closed-loop control.

Feel free (and please do!) to reach out if you have any questions or comments about my research, or anything else you’d like to discuss or share with me!